Driving product clarity, reducing migration failure, and creating scalable documentation systems for Atlassian Cloud

Scaling Trust: Building Soft Limits for Jira Cloud Migrations

Context

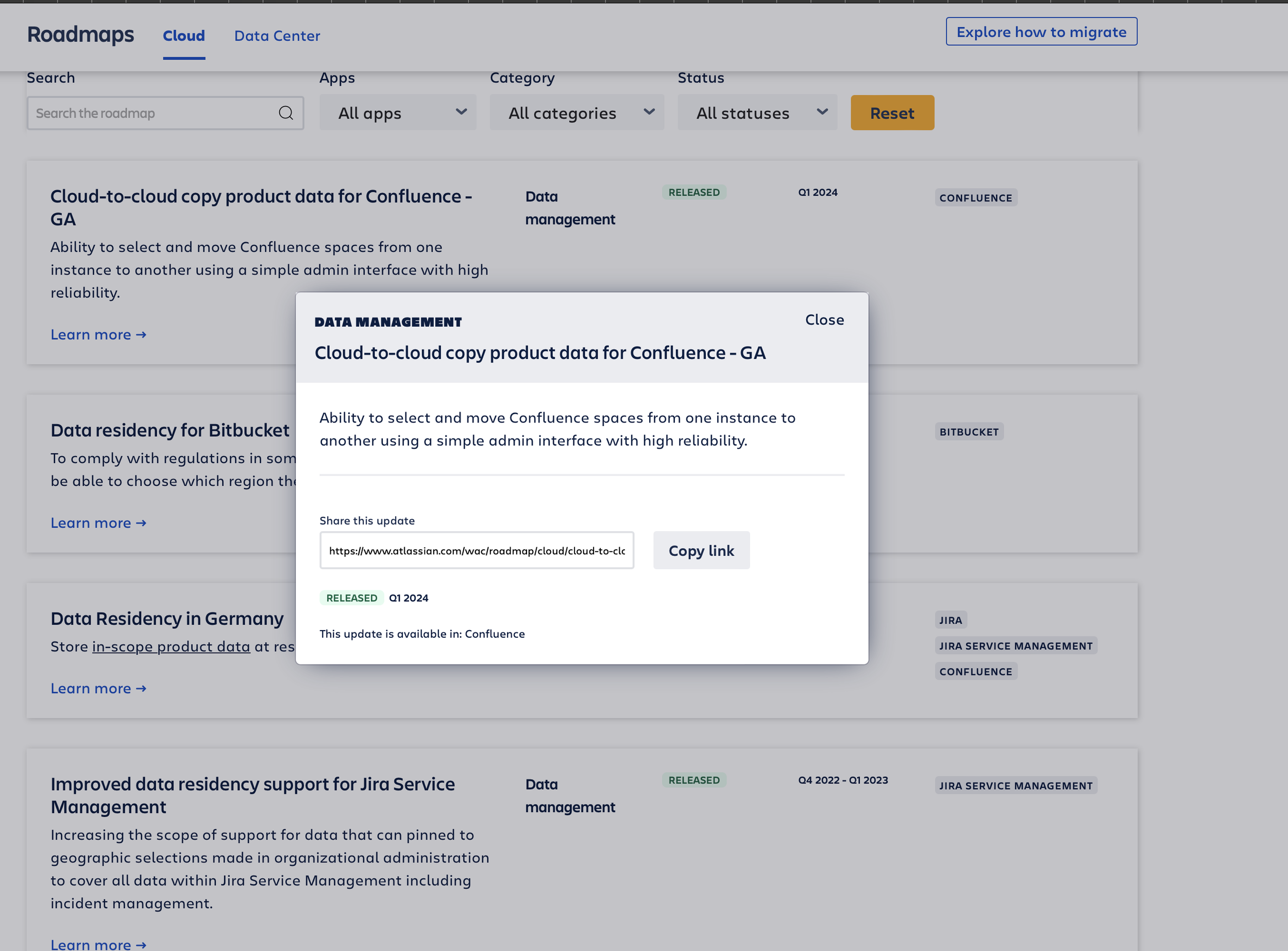

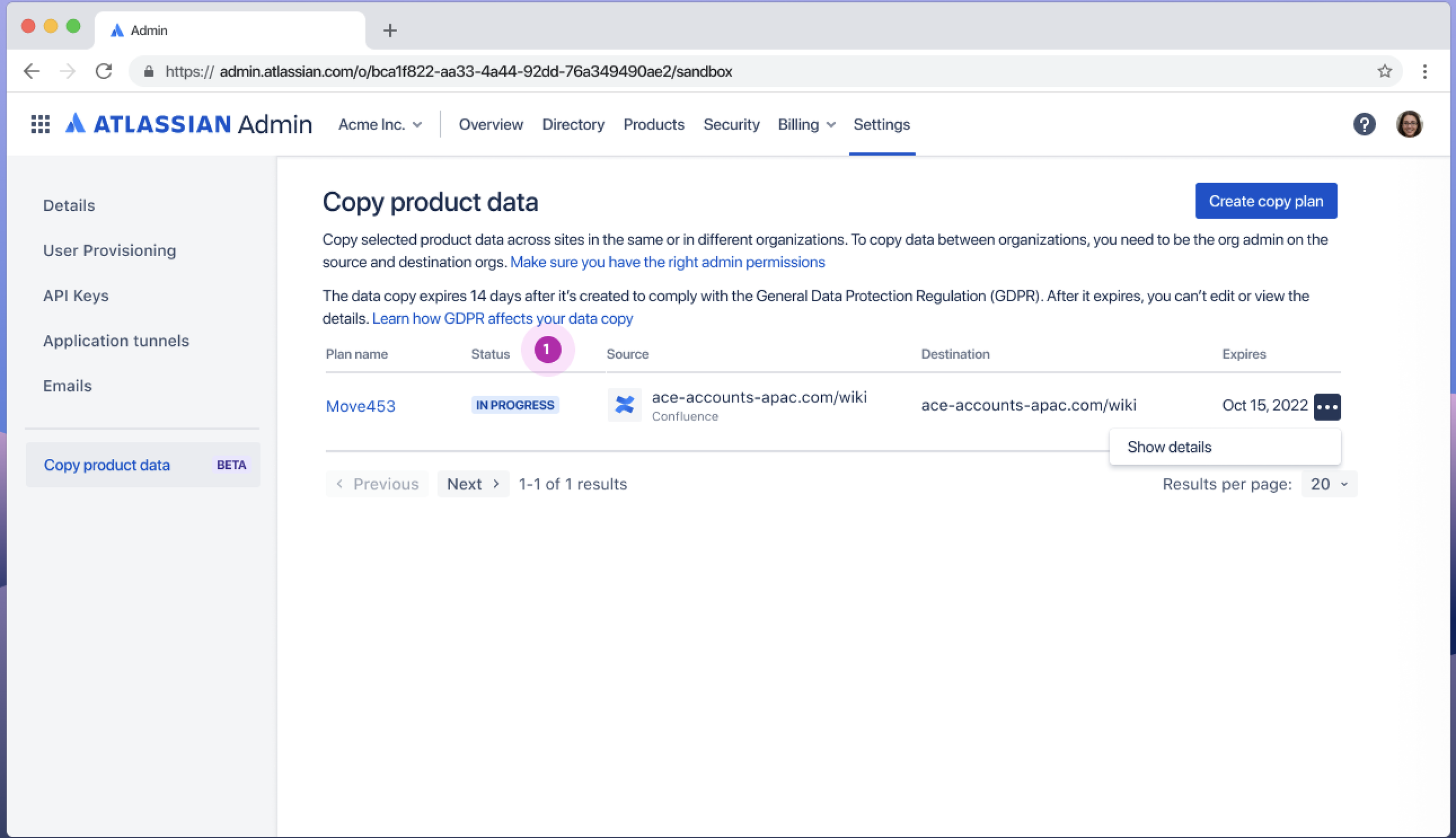

Jira Cloud-to-Cloud (C2C) is an Atlassian cloud product that helps customers move their product data from one cloud site to another.

When I joined, the product was still in Beta but was already being positioned as the recommended migration method for both small and large-scale enterprise use cases.

This project focused on improving migration clarity, user confidence, and support readiness for Jira C2C during this transition phase.

My role

I was the first content designer to lead the creation of soft limits for Atlassian’s cloud offerings. My responsibilities included:

Leading strategy, design, and rollout of the guardrails framework

Coordinating across product, engineering, legal, and support

Synthesizing insights from research and technical sources

Authoring internal and external documentation

Collaborators

Product Design, Product Management, Engineering, Legal, Customer Support

Timeline

3 months

Audience

Jira C2C admins assessing migration options

Support teams handling queries

Customer-facing teams guiding customers on product selection

Problem Statement

Jira C2C was being positioned as a scalable migration tool while still in active development, with limited and evolving capabilities.

This created a growing gap between what users expected the product to handle and what it could reliably support in real-world migrations.

As a result, users were making high-risk migration decisions without clear visibility into product limitations, and support teams lacked a consistent way to guide them.

The core question became:

How might we set clearer expectations about Jira C2C’s capabilities and risks, so users can plan better and support teams can guide them more effectively?

Goal

Help customers use Jira C2C more confidently by:

clearly explaining what the product can and cannot do

reducing migration failures through better pre-migration guidance

equipping support teams with unified and clear messaging

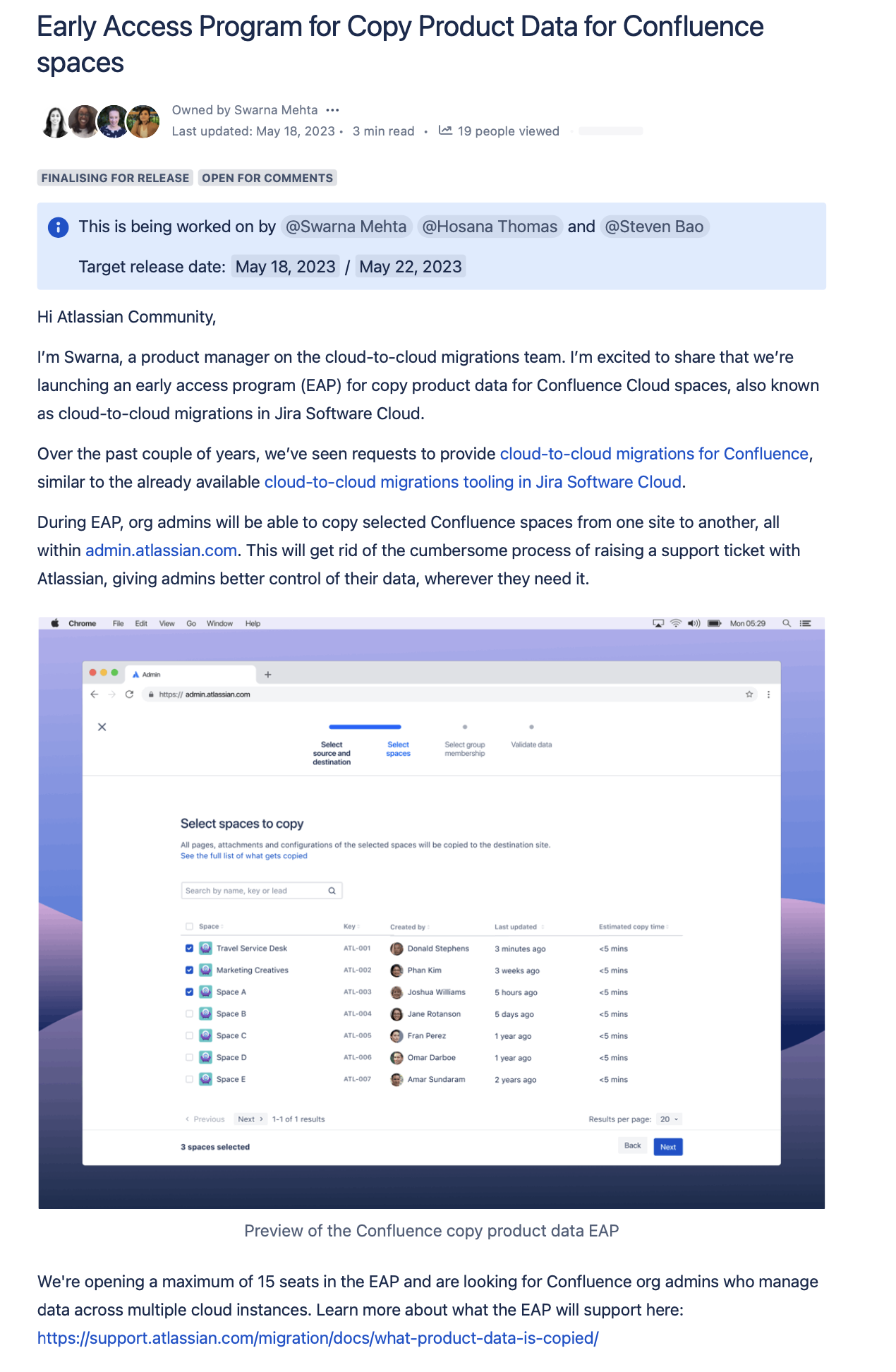

building a framework that could be reused for other Atlassian Cloud products

aligning with Atlassian’s north star of cloud growth through successful enterprise migrations

Approach

Resolving each user issue on its own required deep collaboration across design, engineering, and support.

Instead of treating documentation as a downstream fix, I approached this as a system design problem. The intent was to build a common understanding of where migrations were breaking down, and to use that shared view to design a content strategy that could guide both user decisions and internal product direction.

This allowed us to move from reacting to isolated incidents to designing a unified, proactive approach to migration risk.

What I Delivered

User-facing documentation explaining Jira C2C capabilities and limitations

A shared structure for defining and documenting migration risks

Internal support enablement documentation and FAQs

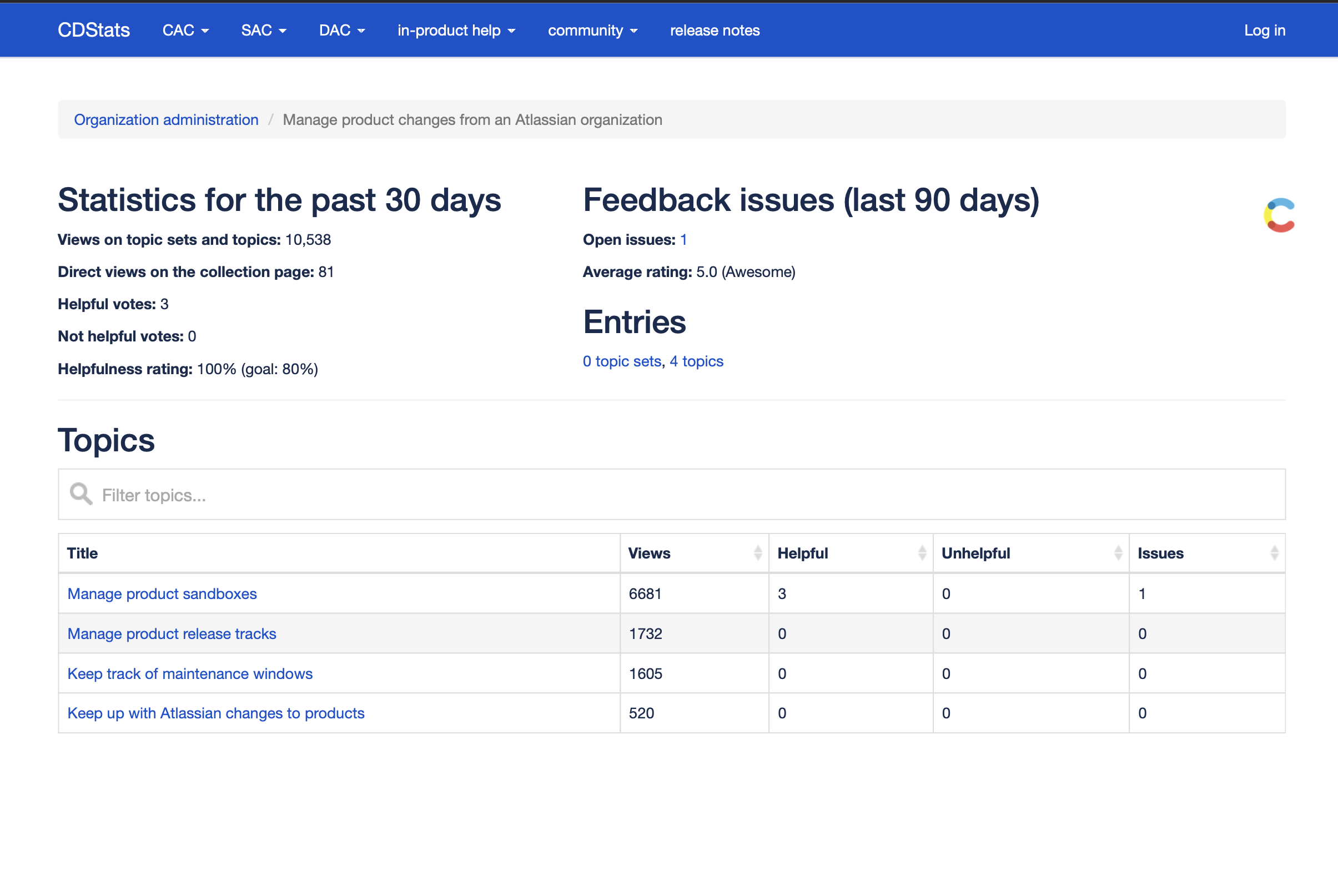

A living Confluence knowledge base used by product, engineering, and support

Updated UI and documentation with clearer Beta messaging

Solution overview

I split the content strategy into three core aspects:

Clarity in choice: Enabled users to choose the right tool based on their data type and size. We focused on enabling more confident, informed decision-making.

Honest product positioning: Set realistic expectations before users started migrations, which reduced frustration from discovering issues too late in the flow.

Scalable frameworks and support enablement: Aligned support, engineering, and design around one unified strategy.

Support teams used the documentation directly in ticket replies

Soft limits became a go or no-go checkpoint for enterprise migrations

Users had clearer guidance before attempting large data moves

Product teams reused the framework for other Cloud tools

The tracker template was adopted by other teams building guardrails

Engineers used it as a pre-release checklist for new builds

Jira C2C moved closer to launch readiness with fewer unknowns and escalations

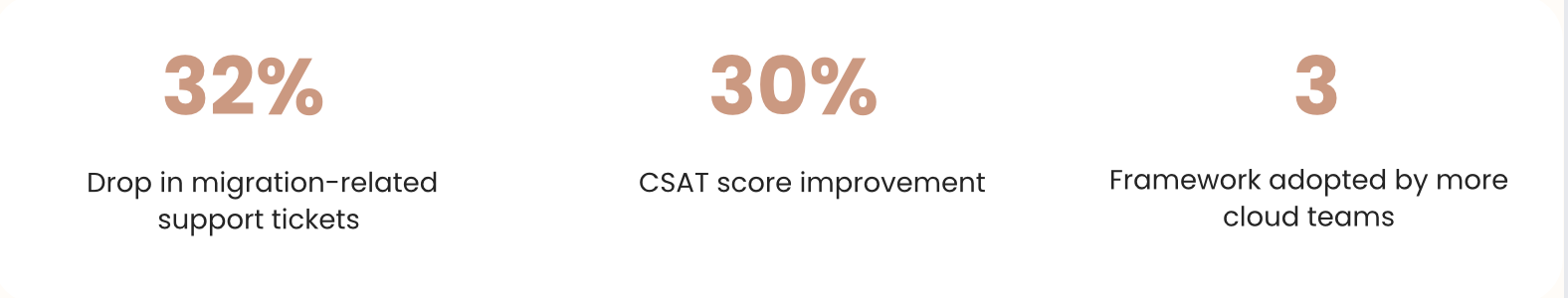

Impact

Even before formal UX metrics were available:

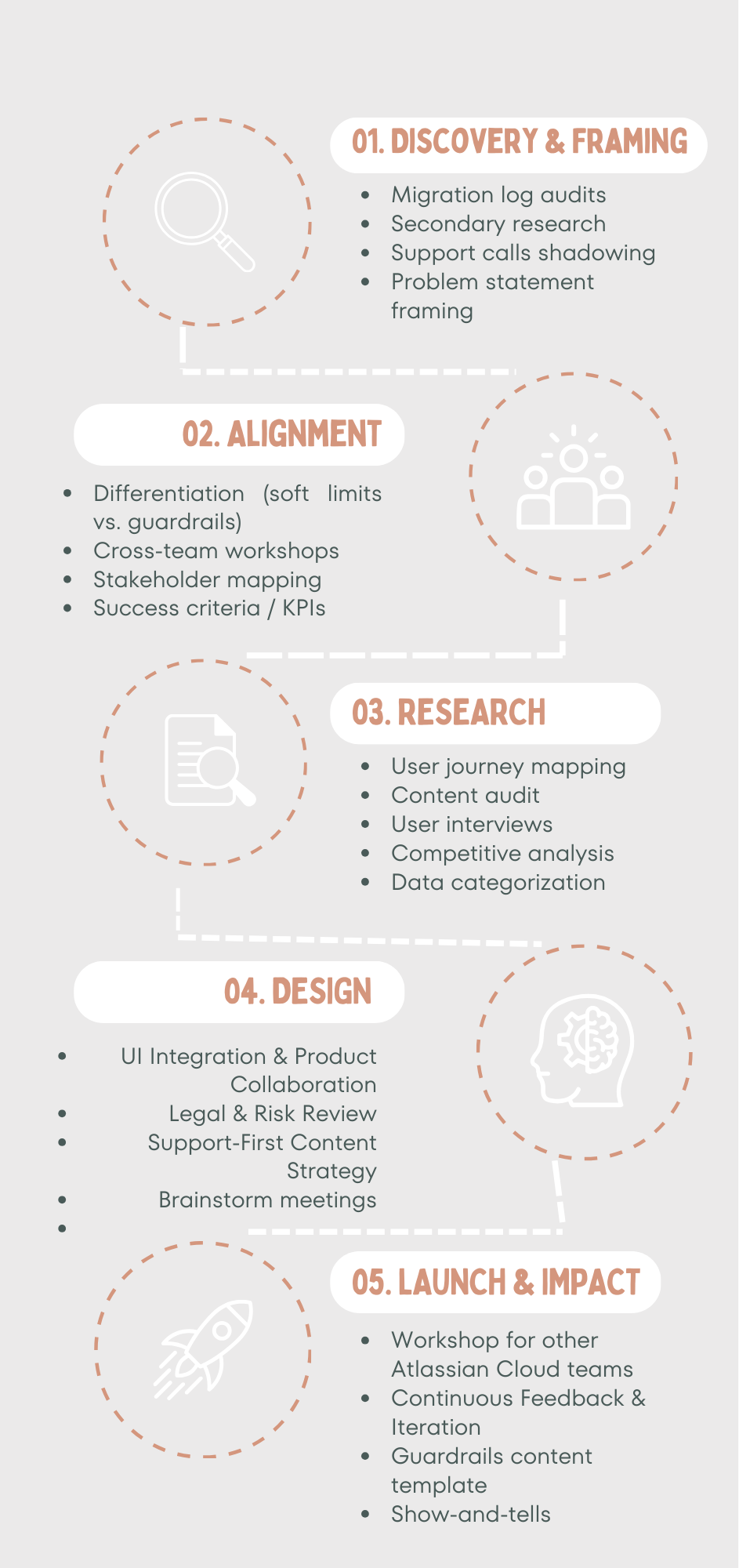

Design process

The process was non-linear; we had to revisit and refine our approach as new insights emerged. This section details how the research system was built, how patterns emerged, and how the soft limits model took shape.

Research & inputs

(How I built understanding)

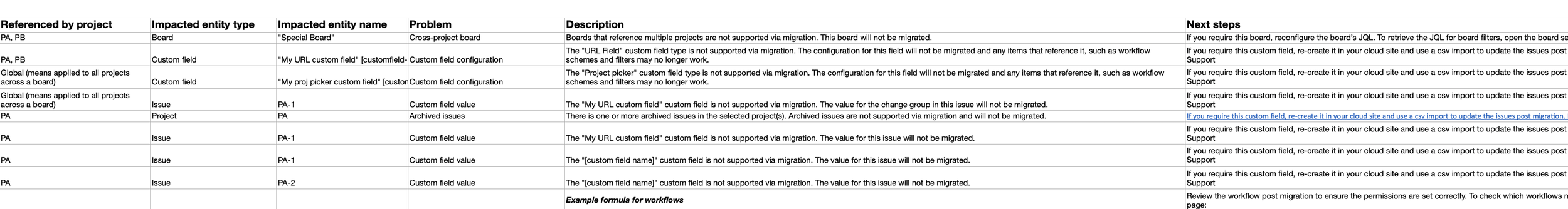

When I joined the project, there was no single, reliable source of user data for Jira C2C. The product was in Beta, and information about failures was scattered across support, engineering, PMs, and community channels. I focused on making sense of the signals already present across these channels that reflected real operational issues.

I partnered with the lead content designer from the Data Center platform team, who had already worked on guardrails for their products. Their experience gave me valuable context on what success looked like and how we could adapt their approach for the Jira C2C use case.

With that context, I set up weekly syncs with engineering, PMs, product design, and support to gather whatever existing user data we had. This included:

Support call recordings and ticket escalations

Engineering logs and Jira tickets

PM inputs from customer emails and community posts

Existing user interviews and Beta feedback

At this stage, the problem was not a lack of data. It was a lack of structure.

Key findings

Each source answered a different part of the problem:

Migrations often failed without clear reasons or recovery steps

Interviews showed that users hesitated because there was no migration status reporting, so they couldn’t tell what had succeeded or failed

Support data showed where users were struggling and how issues were being explained

Engineering logs and UI feedback were not actionable for users

Together, these inputs gave us a system-level view of both technical behaviour and user perception.

Data categorization & prioritization

(Creating order from scattered inputs)

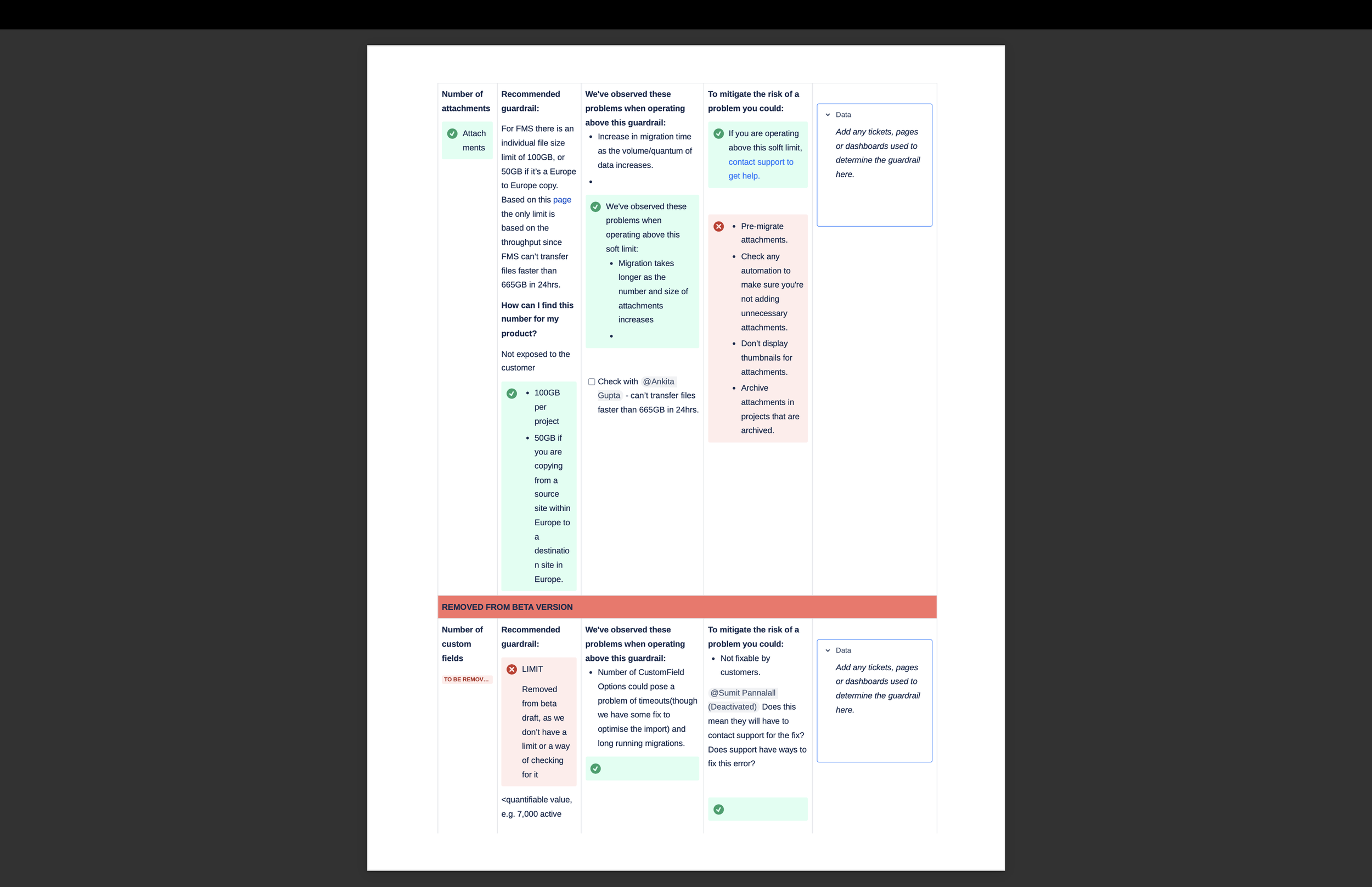

To make sense of these inputs, I created a shared Confluence tracker that acted as a living repository of user issues, Jira tickets, support workarounds, and internal notes.

I spent time with support teams to understand:

what kinds of questions users were asking

how they were responding

what workarounds they were using

These conversations revealed recurring user pain points and showed that support teams lacked clear product capability information and consistent language.

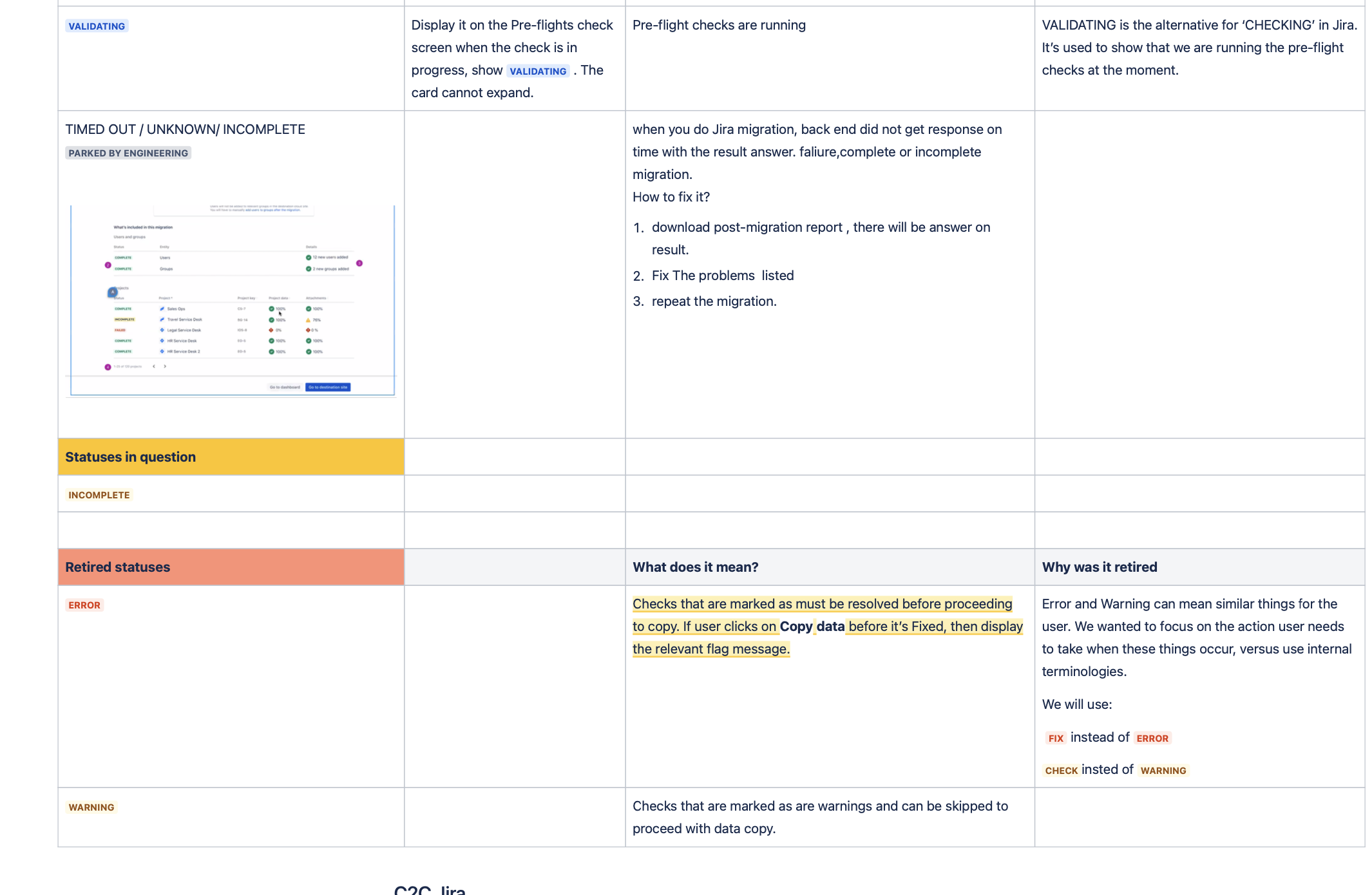

Once the tracker had enough data, I started categorising issues based on:

affected components (projects, fields, comments, attachments, etc.)

urgency and frequency

whether a workaround existed

and whether the issue was systemic, an edge case, or high risk

This helped us establish design priorities by showing which failures were both frequent and impactful, and which ones were not yet reliable enough to publish externally.

Identifying where users needed guardrails

(What I decided and why)

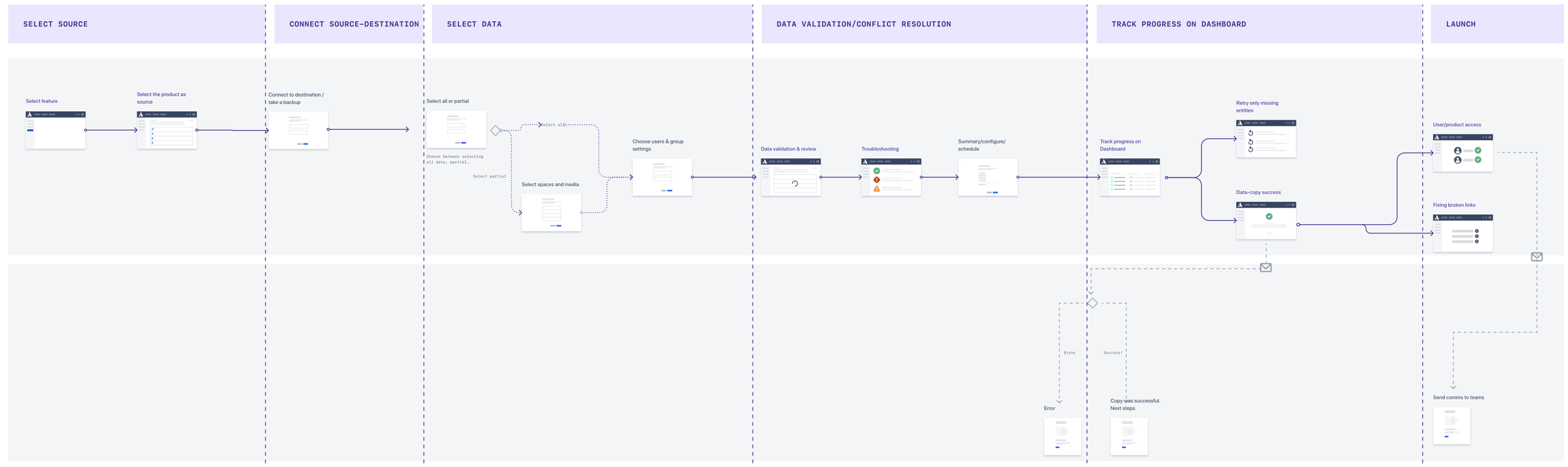

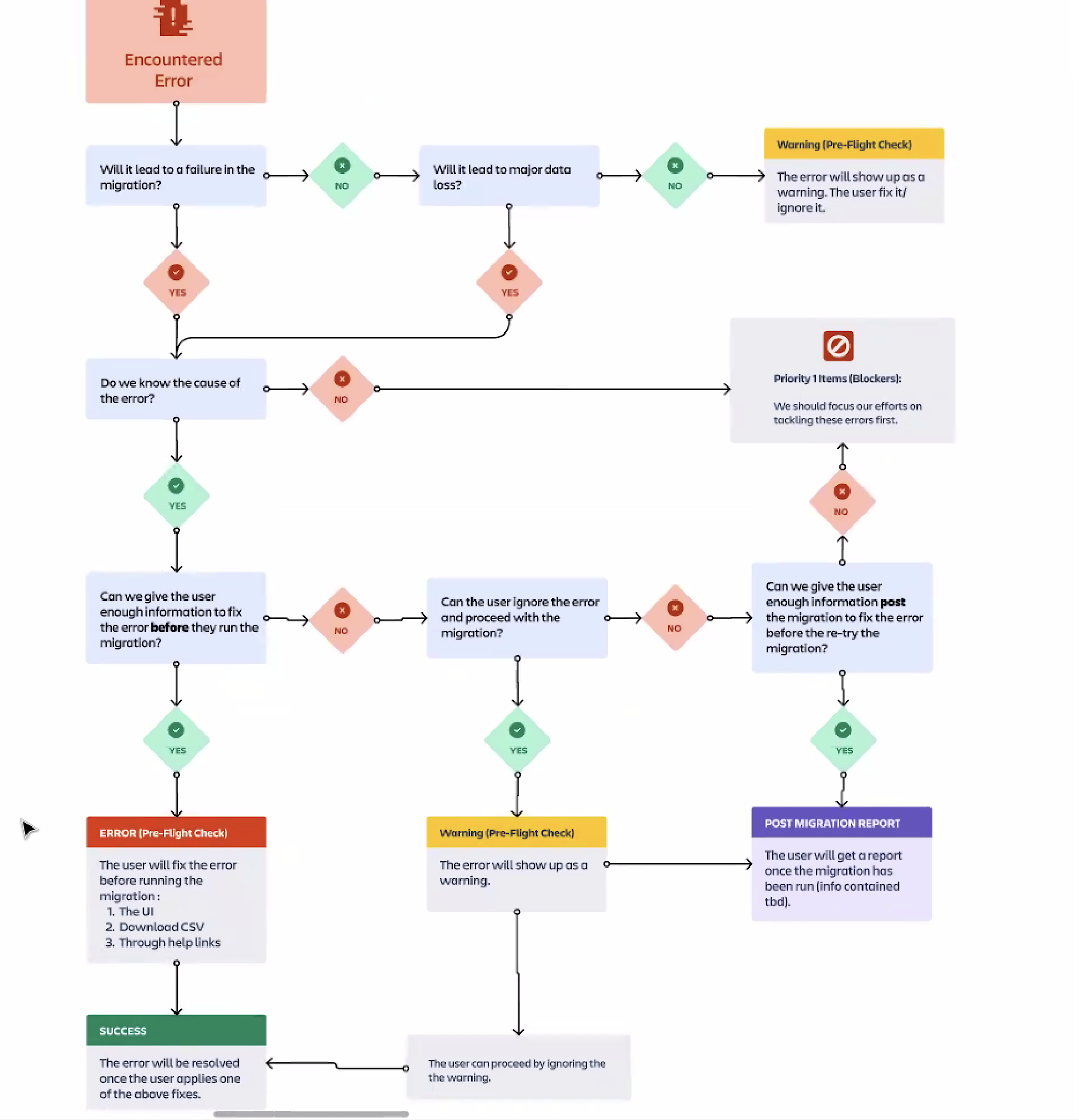

With priorities in place, I worked with the product designer and engineers to map the end-to-end migration journey.

We focused on:

where users were most likely to fail

where failures were invisible or hard to recover from

and where support teams had no clear guidance

This mapping revealed a consistent pattern: most serious breakdowns were happening before users even started migrating, during planning and decision-making.

Users were making high-risk choices without knowing:

whether their data size was supported

which components were likely to fail

or what “success” looked like in a Beta product

Aligning with the Journey

We were confident that aligning our guidance with the user journey would enable:

customers to make informed decisions about Jira C2C before migrating

support teams to provide consistent, aligned responses to data-related issues

engineering to track known problem areas and log coverage

designers and PMs to reference data limits when planning roadmap or UI updates

This allowed us to ship a meaningful first version while leaving room for iteration.

Challenges in alignment

Initially, we faced some pushback from PMs and engineering, who had competing development priorities. To counter this, we presented:

migration failure reports from support

documentation feedback from users

screenshots from the Atlassian Community

internal business goals around cloud adoption and enterprise migration success

This helped shift the guardrails effort from a “nice-to-have” to a strategic priority for the next quarter.

Identifying the limits of existing guardrails

(Conceptual tension)

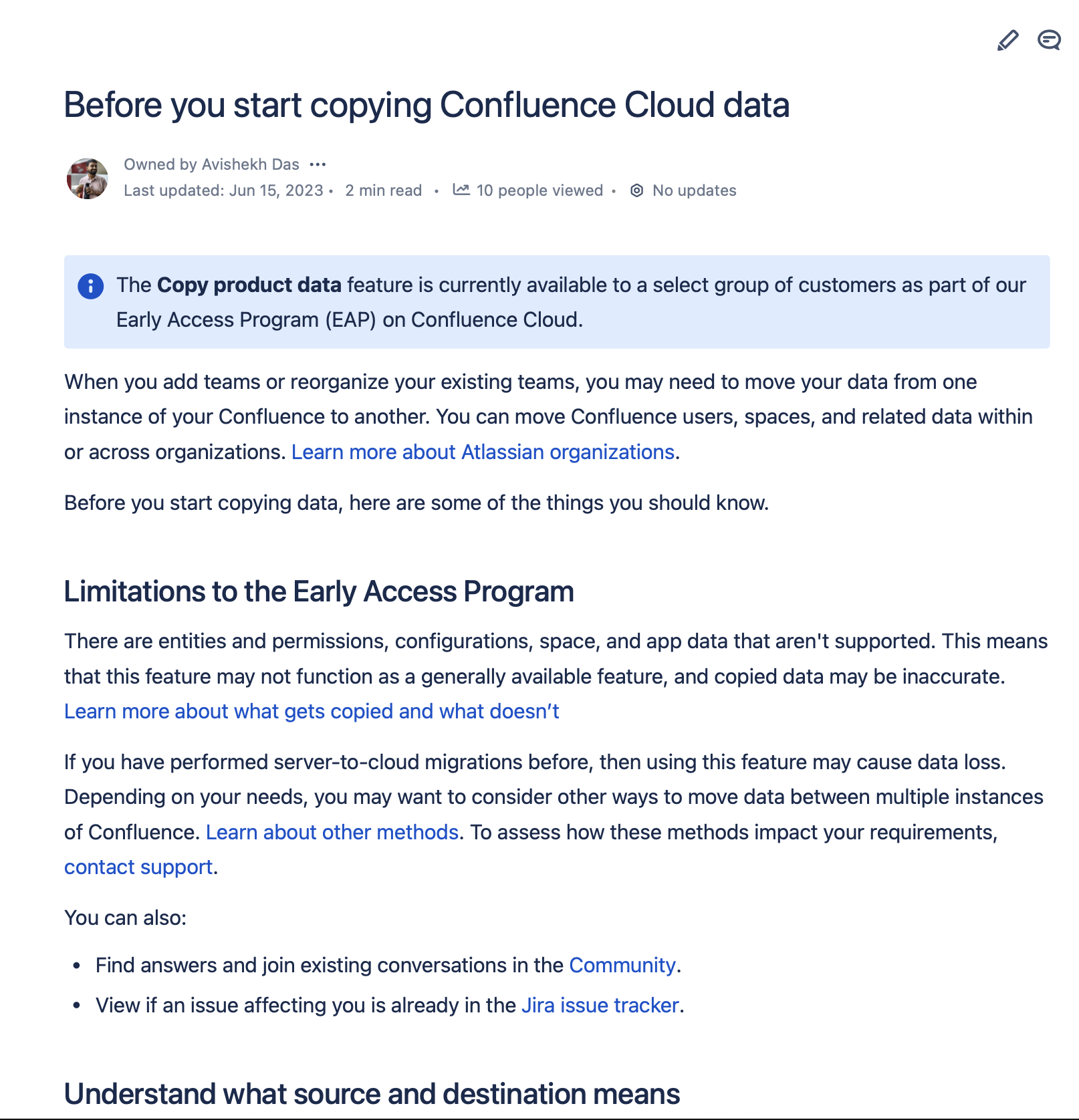

As we started shaping the documentation, I realised that our positioning did not fully align with the existing guardrails used across Atlassian.

The Data Center guardrails model focused on long-term system health and stability in on-prem environments. Our problem space was cloud migrations, where users were trying to move large volumes of data through a system that was still evolving.

This mismatch made it difficult to reuse the same mental model and language.

I revisited the original guardrails pitch and discussed this gap with content design, support, product design, engineering, and later legal.

It became clear that although both approaches aimed to mitigate risk, they served different goals:

Data Center guardrails were about protecting system stability over time

Our migration limits were about enabling successful and efficient data movement

Guardrails vs. soft limits: Laying down the distinction

Based on these discussions, we formally separated the two concepts:

Guardrails address long-term system health and maintenance in on-prem environments. The desired outcome is system-wide stability.

Soft limits focus on enabling successful and efficient data movement in cloud migrations. The desired outcome is increased migration effectiveness.

This distinction shaped:

the terminology used across support macros and documentation

collaboration with legal on appropriate wording

internal alignment across engineering, PMs, and support

Following legal guidance, I proposed the term soft limits to reflect advisory, in-beta guidance rather than hard technical constraints.

Shaping the soft limits

With the conceptual model in place, the next challenge was defining which limits were feasible to publish and how they should be structured.

We shortlisted what made it into version 1 based on:

migration components most likely to fail

features not fully supported or prone to inconsistency

common failure themes with known workarounds

areas where users had no visibility and support had no clear answers

Some potential limits were put on hold due to:

limited user data

no existing fix or workaround

legal sensitivity

Documentation & design

Once structure and messaging were clear, I translated the soft limits into usable and scalable documentation.

We reused the platform team’s guardrails layout to maintain cross-product consistency, adapting tone and hierarchy for the in-beta, advisory context.

I also partnered with the product designer to integrate soft limits into the product flow by:

identifying key decision points

adding in-product links and warnings

aligning onboarding with documentation

Support-First Content Strategy

Because most user issues were surfacing in support escalations, we created an extensive FAQ section based on real user queries and the answers support was already handling manually.

This not only reduced the burden on support teams but also allowed us to scale their responses through documentation.

Together with the in-product links and warnings, this ensured users encountered the guidance at the moment it mattered.

Launch & rollout

Externally:

email to Beta users

community announcement

in-product references

inclusion in Atlassian documentation hub

Internally:

support and engineering walkthroughs

regular handoffs

show-and-tells across cloud teams

Closing reflection

This project closed a critical trust gap between product capability and user expectation.

More importantly, it showed how content design can shape product behaviour, support workflows, and long-term system clarity in complex, evolving platforms.